Hi! I’m an AI researcher specializing in Vision-Language Models and Generative AI. I earned my M.S. in Artificial Intelligence from Hanyang University, where I was advised by Prof. Dong-Jin Kim.

My primary research centers on Visual Prompt Tuning, with a specific focus on two core objectives: enhancing visual information through structured prompts and mitigating modality gaps between vision and language. I aim to integrate fine-grained representations to create more effective, interpretable, and robust prompts that align seamlessly with multi-modal applications. Recently, I have expanded my research scope to Text-to-Video generation, exploring novel ways to synthesize dynamic visual content.

If you are interested in working with me, please feel free to email me at taewhan7725@gmail.com.

Research News

[12/2024] SIDA and SynC are accepted by ACMMM 2025

[12/2024] ViPCap is accepted by AAAI 2025

[09/2024] IFCap is accepted by EMNLP 2024

[03/2022] “Is college students’ trajectory associated with academic performance?” is accepted by Computers & Education 2022

Selected Publications

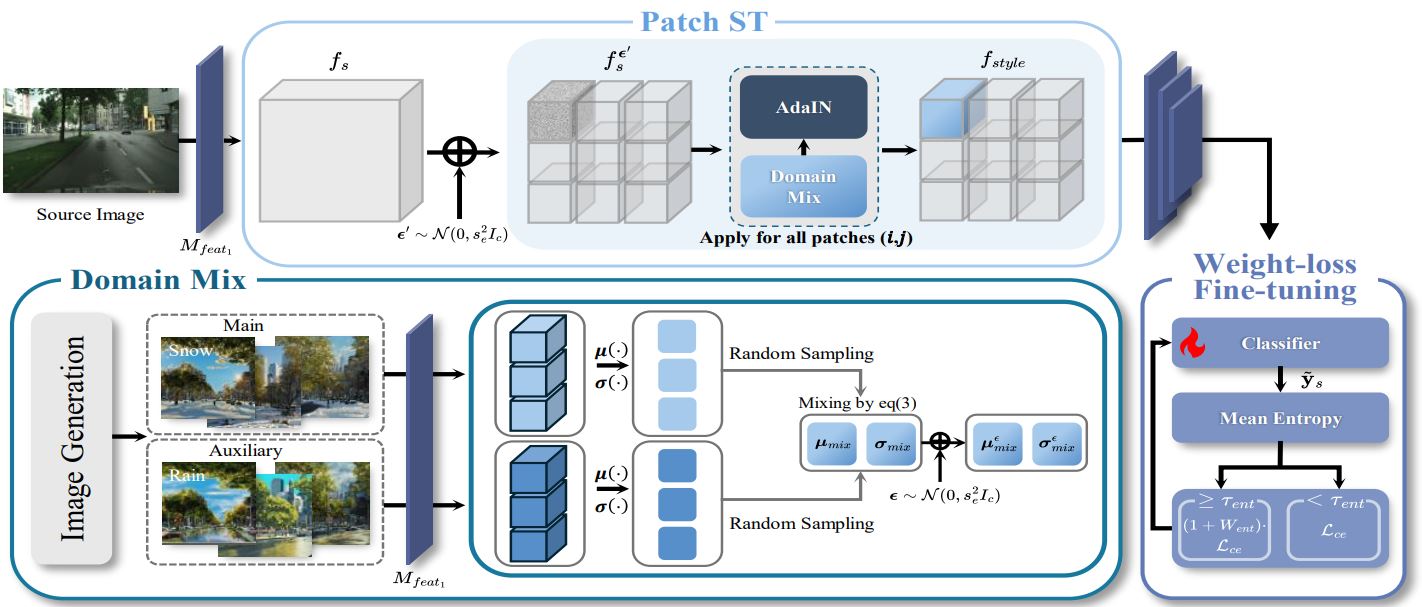

| SIDA: Synthetic Image Driven Zero-shot Domain Adaptation. Ye-Chan Kim, SeungJu Cha, Si-Woo Kim, Taewhan Kim, Dong-Jin Kim. ACMMM, 2025 |

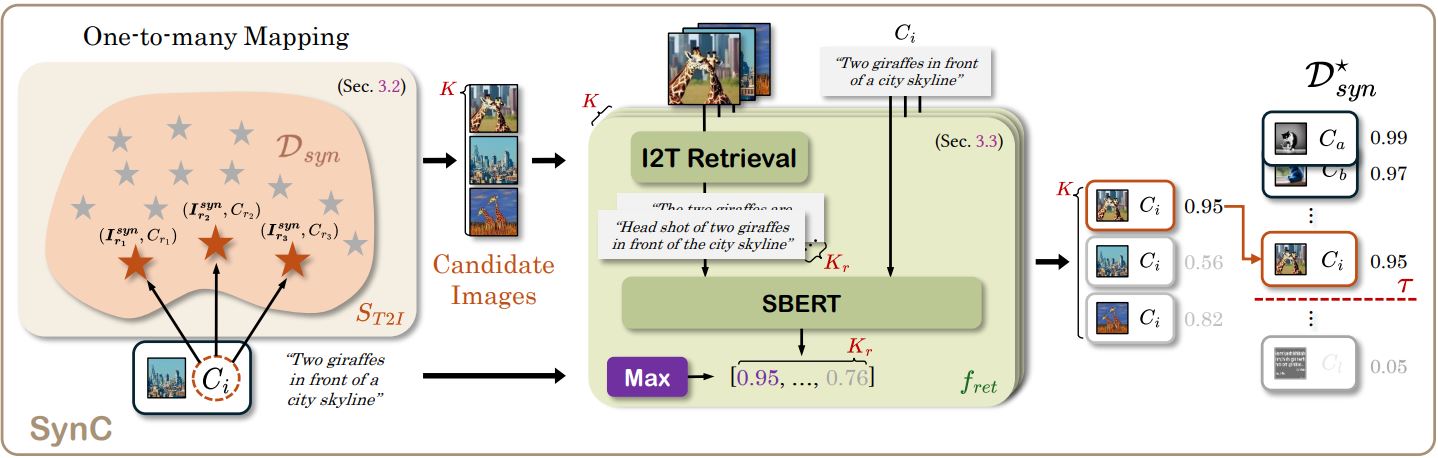

| SynC: Synthetic Image Caption Dataset Refinement with One-to-many Mapping for Zero-shot Image Captioning. Si-Woo Kim, MinJu Jeon, Ye-Chan Kim, Soeun Lee, Taewhan Kim, Dong-Jin Kim. ACMMM, 2025 |

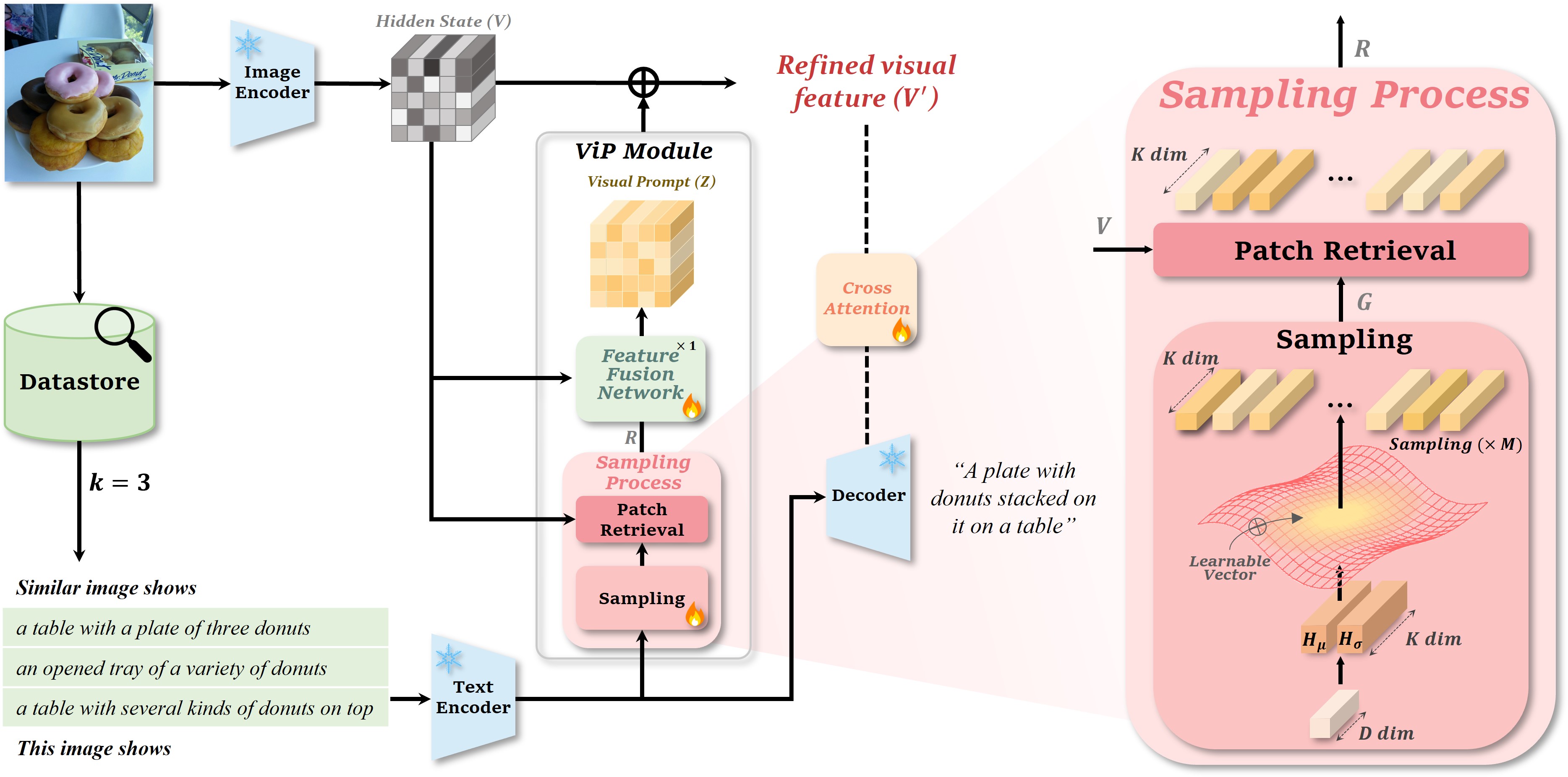

| ViPCap: Retrieval Text-Based Visual Prompts for Lightweight Image Captioning. Taewhan Kim, Soeun Lee, Si-Woo Kim, Dong-Jin Kim. AAAI, 2025 |

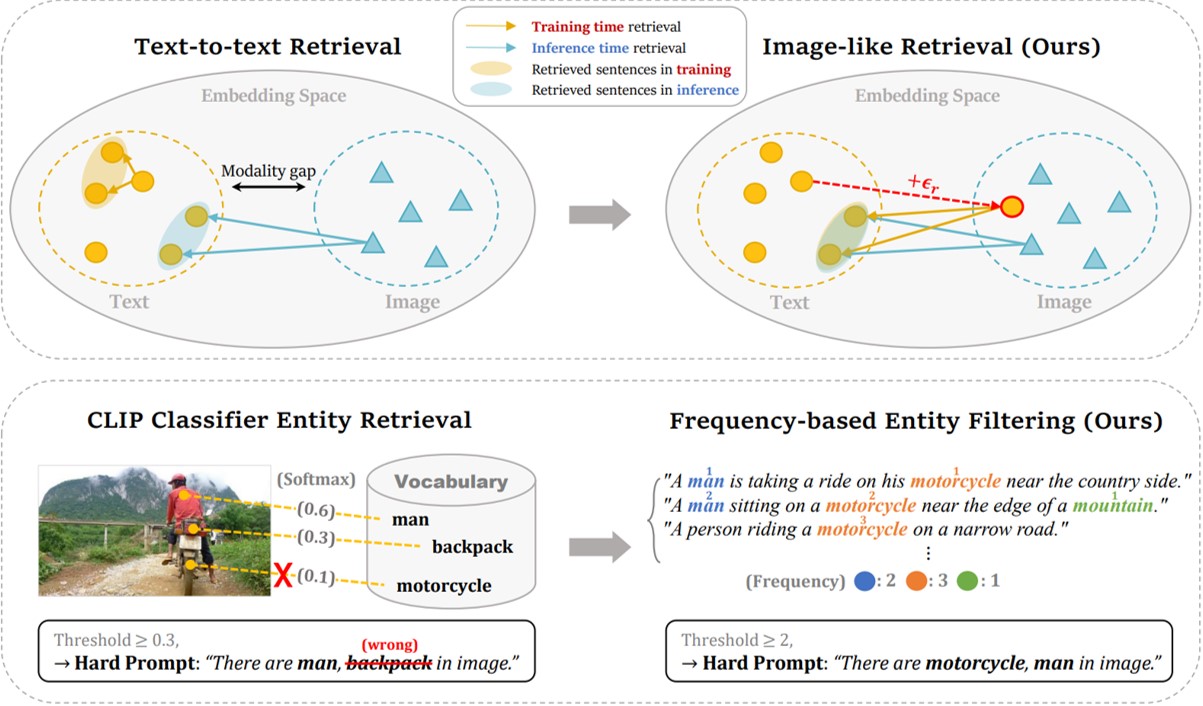

| IFCap: Image-like Retrieval and Frequency-based Entity Filtering for Zero-shot Captioning. Soeun Lee*, Si-Woo Kim*, Taewhan Kim, Dong-Jin Kim. EMNLP, 2024 |

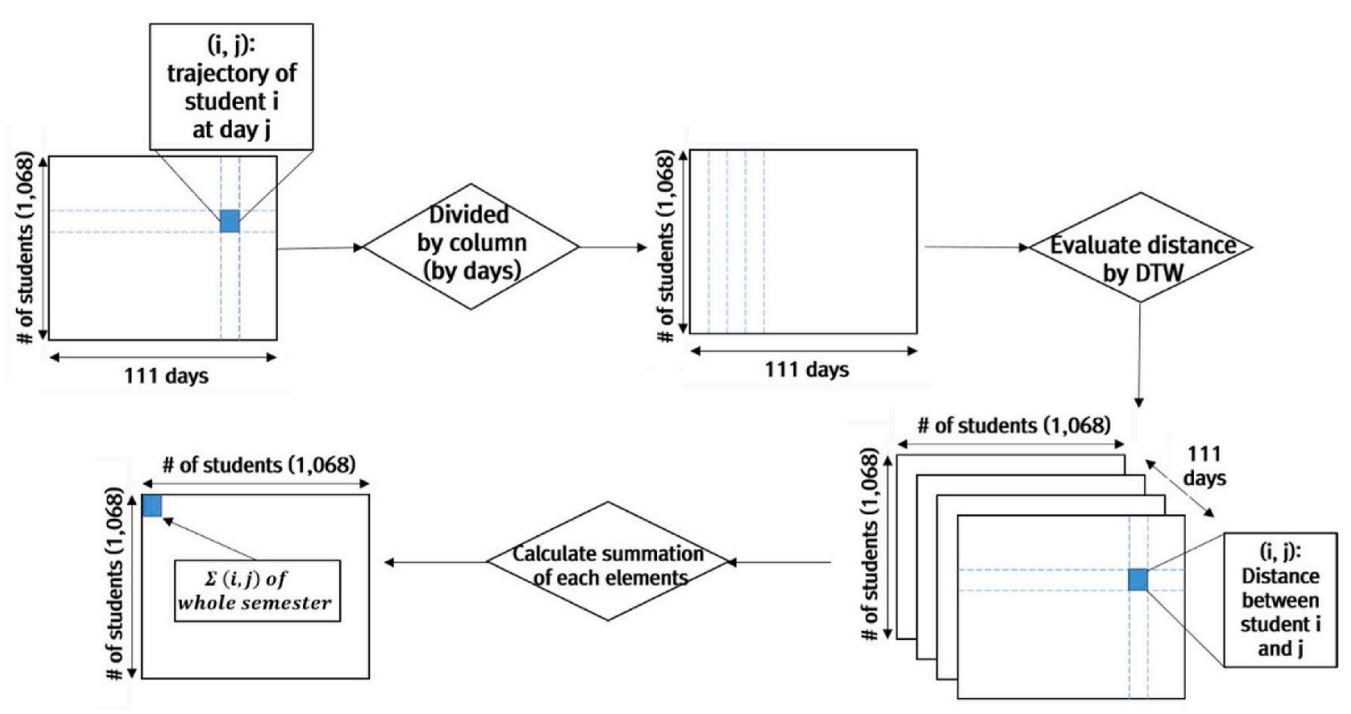

| Is college students’ trajectory associated with academic performance? Hyoungjoon Lim, Soohyun Kim, Kyong-Mee Chung, Kangjae Lee, Taewhan Kim, Joon Heo. Computers & Education, 2022 |